To gather data about the universe they study, scientists need tools that see beyond the limits of human sight. The crude optical microscopes and x-ray tubes of the past saw only dimly and imperfectly compared with the modern instruments scientists now use to probe inorganic and organic matter from its outer surface down to the level of individual atoms. The current generation of x-ray synchrotron facilities boasts a dazzling array of instruments, detectors, and techniques of exquisite sensitivity and capability, and the next generation promises to increase those capabilities by orders of magnitude.

It all sounds like an indisputably positive state of affairs, but ironically, there is a downside. As our scientific tools become ever more sensitive and powerful, able to gather ever-increasing amounts of raw data, the burden of processing and analyzing all that data grows rapidly as well. The ubiquitous digitization of scientific data does not help: while digitization makes it easier than ever to collect and store information, it has also led to a proliferation of different, and not always compatible, software and computing resources among research facilities. As new synchrotron facilities come online, this problem will only grow.

To tame this ever-growing tsunami of data, a team of researchers at the U.S. Department of Energy’s Advanced Photon Source, an Office of Science user facility, is developing a collaborative framework for the analysis, processing, and reconstruction of tomographic datasets from diverse synchrotron sources. Based on the Python programming language, the TomoPy platform is intended to provide a common means for researchers to share, integrate, and use data collected at different facilities.

Along with the growing number of synchrotron x-ray sources inevitably comes greater diversity in methods and techniques. Facilities naturally tend to rely on data analysis tools and software they have developed with an eye to the specific characteristics and capabilities of their own instruments. But these tools may not be fully compatible with the software used at other facilities, which complicates any attempt to share or integrate datasets and results. There is also a growing need for the flexibility to process data either in real time at the synchrotron or later at off-site locations. TomoPy addresses these issues and others with a modular approach that is general enough to work across sites yet flexible enough to adapt to local preferences and needs.

In their paper, published in the Journal of Synchrotron Radiation, the authors demonstrate the utility of the TomoPy model by showing how tomographic data analysis can be divided into a series of sequential steps. These steps are grouped, or "modularized," into a chain of related major tasks that can branch off into sub-tasks specific to particular tomographic techniques, such as x-ray transmission tomography, x-ray fluorescence microscopy, or x-ray diffraction tomography. As an example, the researchers trace how x-ray transmission data pass through the TomoPy framework from acquisition to final image display.
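One way to picture this modular chain is as an ordered list of interchangeable steps: stages common to every technique are written once, and only the reconstruction stage branches by modality. The plain-Python sketch below illustrates that idea under stated assumptions; all step names (`normalize`, `remove_artifacts`, `reconstruct`, `segment`, and the `*_specific_step` placeholders) and helpers (`make_step`, `run_pipeline`) are hypothetical and are not TomoPy functions.

```python
from typing import Callable, Dict, List

# A step takes a data record and returns a new one (hypothetical interface).
Step = Callable[[dict], dict]

def make_step(name: str) -> Step:
    """Build a placeholder step that only records its name in the record's history."""
    def step(data: dict) -> dict:
        return {**data, "history": data["history"] + [name]}
    return step

# Stages shared by every tomographic modality (placeholder names).
PRE_PROCESSING: List[Step] = [make_step("normalize"), make_step("remove_artifacts")]
POST_PROCESSING: List[Step] = [make_step("segment")]

# Each modality branches off with its own sub-task before a common reconstruction step.
MODALITIES = ("transmission", "fluorescence", "diffraction")
RECONSTRUCTION: Dict[str, List[Step]] = {
    m: [make_step(f"{m}_specific_step"), make_step("reconstruct")] for m in MODALITIES
}

def run_pipeline(modality: str, data: dict) -> dict:
    """Run shared pre-processing, the modality's branch, then shared post-processing."""
    for step in PRE_PROCESSING + RECONSTRUCTION[modality] + POST_PROCESSING:
        data = step(data)
    return data

result = run_pipeline("transmission", {"history": []})
print(result["history"])
# ['normalize', 'remove_artifacts', 'transmission_specific_step', 'reconstruct', 'segment']
```

In this design, supporting another technique means adding one entry to `RECONSTRUCTION`; the shared pre- and post-processing stages stay untouched.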

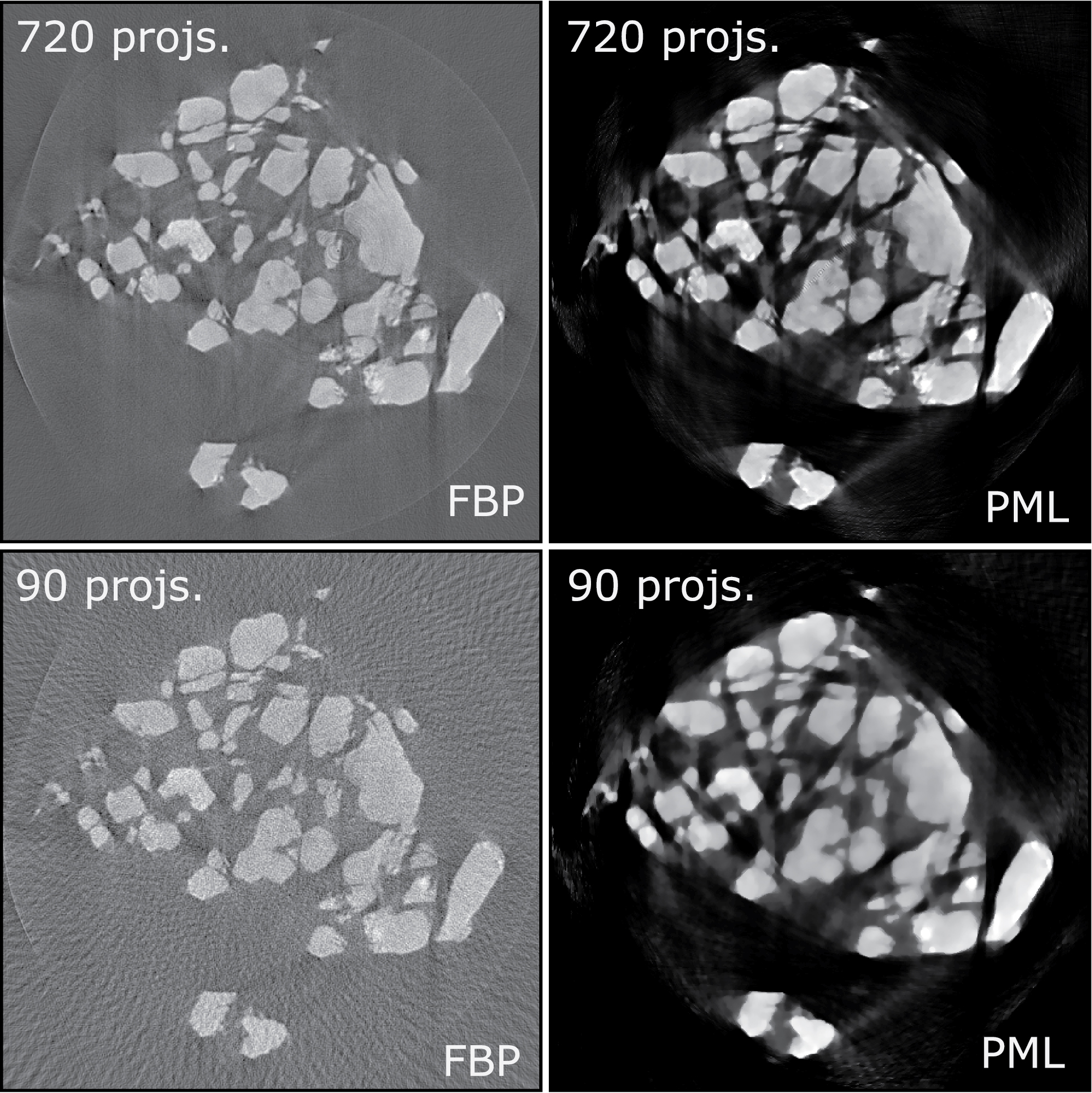

The first main module in the TomoPy model is pre-processing, which includes normalization of the raw data (e.g., dark-field and white-field corrections), artifact removal, and compensation for an insufficient field of view. Next comes reconstruction, in which the corrected projection data are mapped into image space. By default TomoPy uses the Fourier-based Gridrec method for this task, chiefly for its computational speed. However, the reconstruction module can also employ iterative model-based inversion techniques, which are particularly useful when few projections are available or the data have a low signal-to-noise ratio. The final module, post-processing, supports any further processing or imaging steps that may be needed, including region segmentation or quantitative analysis of the images (see the figure).
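For transmission data, the white-field and dark-field correction is commonly written per pixel as (I − D)/(F − D), where I is the raw projection, F the averaged white-field (flat) frames, and D the averaged dark-field frames; taking −ln of the result then yields the attenuation line integrals (Beer-Lambert law) that transmission reconstruction works from. The sketch below assumes small 2-D lists of floats and uses hypothetical function names; TomoPy ships its own optimized routines, and this is not its implementation.

```python
import math
from typing import List

Image = List[List[float]]  # a 2-D frame stored as rows of pixel values

def mean_frame(frames: List[Image]) -> Image:
    """Average a stack of equally sized 2-D frames pixel by pixel."""
    n = len(frames)
    rows, cols = len(frames[0]), len(frames[0][0])
    return [[sum(f[r][c] for f in frames) / n for c in range(cols)] for r in range(rows)]

def normalize(proj: Image, flats: List[Image], darks: List[Image], eps: float = 1e-6) -> Image:
    """Flat/dark-field correction, per pixel: (proj - dark) / (flat - dark)."""
    flat = mean_frame(flats)
    dark = mean_frame(darks)
    # eps guards against division by zero in dead pixels where flat equals dark.
    return [
        [(p - dark[r][c]) / max(flat[r][c] - dark[r][c], eps) for c, p in enumerate(row)]
        for r, row in enumerate(proj)
    ]

def minus_log(trans: Image, eps: float = 1e-6) -> Image:
    """Beer-Lambert step: -ln(T); values <= 0 (e.g. from noise) are clamped to eps first."""
    return [[-math.log(max(t, eps)) for t in row] for row in trans]

# Usage with a tiny 1x2 detector: two white-field frames and one dark-field frame.
proj = [[60.0, 35.0]]
flats = [[[110.0, 110.0]], [[110.0, 110.0]]]
darks = [[[10.0, 10.0]]]
norm = normalize(proj, flats, darks)
print(norm)             # [[0.5, 0.25]]
print(minus_log(norm))  # approximately [[0.693, 1.386]]
```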

The modular strategy of TomoPy allows processing methods that are common to different tomographic techniques to be shared and executed in parallel on multiple processor cores, saving time and making full use of the available computing resources. The TomoPy framework is open-source and designed to be independent of platform and data format; although it is currently implemented for CPU and GPU computing, it can easily be integrated with large-scale computing facilities.
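The general chunk-and-distribute pattern behind this kind of parallelism can be sketched in a few lines: in parallel-beam geometry each detector row yields an independent sinogram, so a stack of slices can be split into contiguous chunks and handed to separate workers. The helper names `split_chunks` and `map_chunked` are hypothetical, and this is not TomoPy's own scheduler. A thread pool keeps the sketch self-contained; for CPU-bound pure-Python work, `concurrent.futures.ProcessPoolExecutor` (or native code that releases the GIL) would be needed for a real speedup.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, TypeVar

T = TypeVar("T")
R = TypeVar("R")

def split_chunks(items: List[T], n_chunks: int) -> List[List[T]]:
    """Split items into at most n_chunks contiguous slices whose sizes differ by at most one."""
    size, extra = divmod(len(items), n_chunks)
    chunks: List[List[T]] = []
    start = 0
    for i in range(n_chunks):
        # The first `extra` chunks take one additional item.
        end = start + size + (1 if i < extra else 0)
        if end > start:  # skip empty chunks when there are fewer items than workers
            chunks.append(items[start:end])
        start = end
    return chunks

def map_chunked(func: Callable[[T], R], items: List[T], n_workers: int = 4) -> List[R]:
    """Apply func to every item, one chunk per worker, and return results in input order."""
    chunks = split_chunks(items, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        # pool.map yields per-chunk results in the order the chunks were submitted.
        per_chunk = list(pool.map(lambda chunk: [func(x) for x in chunk], chunks))
    return [r for chunk_result in per_chunk for r in chunk_result]

# Usage: ten toy "slices", each reduced to one number by a stand-in processing step (sum).
slices = [[float(i)] * 3 for i in range(10)]
print([len(c) for c in split_chunks(slices, 4)])  # [3, 3, 2, 2]
print(map_chunked(sum, slices, n_workers=4))
# [0.0, 3.0, 6.0, 9.0, 12.0, 15.0, 18.0, 21.0, 24.0, 27.0]
```

Contiguous chunks keep each worker's slices together in memory and make it trivial to reassemble results in their original order.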

As scientific instruments continue to grow more powerful, the TomoPy framework is intended to unify and streamline the analysis and sharing of the data they produce. By taking advantage of faster and more efficient computing technology, increasingly sophisticated software, and collaboration between physicists and mathematicians on new mathematical methods, the designers of TomoPy hope to help scientists use their ever-improving tools to full capacity. — Mark Wolverton

See: Doga Gürsoy*, Francesco De Carlo, Xianghui Xiao, and Chris Jacobsen, “TomoPy: a framework for the analysis of synchrotron tomographic data,” J. Synchrotron Rad. 21, 1188 (2014). DOI: 10.1107/S1600577514013939

Author affiliation: Argonne National Laboratory

Correspondence: * dgursoy@aps.anl.gov

This work is supported by the U.S. Department of Energy Office of Science under Contract No. DE-AC02-06CH11357.